Introduction: The Inevitable Collision Course

Let's be honest. We're on a collision course.

On one hand, you have the explosive, world-changing power of artificial intelligence, a technology advancing at a rate that's hard to comprehend. On the other, you have the hard physical limits of energy. For the last few years, the AI world has lived in a state of blissful ignorance, acting as if the laws of thermodynamics were optional. That blissful ignorance is about to end, and it's going to end to the tune of a trillion-dollar energy bill.

The current industry response to this looming crisis is, frankly, unimaginative. We're chasing incremental gains: slightly more efficient GPUs, smarter data center cooling systems, better power usage effectiveness (PUE). These are good things. They are necessary. But they are not a strategy. They are tactical "local optimizations" that completely fail to address the systemic flaw in our thinking. It's like trying to solve a city-wide traffic apocalypse by convincing everyone to buy a car that gets two more miles per gallon. You're not fixing the problem; you're just delaying the inevitable gridlock by a few minutes.

This article isn't about those incremental gains. It's about the trillion-dollar blind spot in our strategy. The crisis we face isn't just an engineering challenge; it's a crisis of imagination. It’s a direct result of a profound misunderstanding of the problem we're trying to solve.

You see, we've become so obsessed with the tool—the AI model—that we've forgotten about the job it was hired to do.

By reframing this entire challenge through the rigorous lens of Jobs-to-be-Done (JTBD), we can move beyond the brute-force approach of "more power, bigger models" and unlock a new class of elegant, ultra-efficient solutions. This isn't about making the computation cheaper. It's about getting the user's job done with radically less computation in the first place.

This is a first-principles approach to solving AI's energy crisis. It’s time to stop building faster cars and start designing a better city.

The Anatomy of AI's Energy Bill: A Sobering Look at the Numbers

Before we deconstruct the problem, you need to appreciate its terrifying scale. The numbers aren't just big; they're growing at a pace that makes Moore's Law look quaint. An AI model's energy appetite is driven by three main phases:

Data Management: Storing and moving the petabytes of data required to feed these models.

Training: The famously intensive process of teaching a foundational model. Training a single large model can consume a massive amount of energy: roughly 4,762 household-years for GPT-4 (2023) and roughly 29,524 household-years for Grok 4 (2025). This is the cost that gets all the headlines.

Inference: The act of using the trained model to answer a query or perform a task. This is the silent killer.

While training costs are immense, they are a one-time (or infrequent) capital expenditure of energy. Inference, however, is a continuous operational expenditure. Every single time you ask an AI to generate text, write code, or create an image, you are spinning an electrical meter somewhere. As AI becomes embedded in trillions of daily transactions, the total energy cost of inference will dwarf the cost of training. This is the looming financial threat. This is where the trillion-dollar bill comes from.
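To make the one-time versus continuous distinction concrete, here is a minimal Python sketch of the break-even arithmetic. The function names and every number in it are illustrative placeholders, not measurements of any real model; swap in your own figures.

```python
import math


def queries_to_match_training(training_kwh: float, kwh_per_query: float) -> int:
    """Queries needed before cumulative inference energy equals training energy."""
    if training_kwh < 0 or kwh_per_query <= 0:
        raise ValueError("training_kwh must be >= 0 and kwh_per_query must be > 0")
    return math.ceil(training_kwh / kwh_per_query)


def days_to_match_training(training_kwh: float, kwh_per_query: float,
                           queries_per_day: float) -> float:
    """Days of steady traffic until inference energy catches up with training."""
    if queries_per_day <= 0:
        raise ValueError("queries_per_day must be > 0")
    return queries_to_match_training(training_kwh, kwh_per_query) / queries_per_day


# Placeholder inputs (assumptions for illustration only, not real measurements).
TRAINING_KWH = 1_000_000.0      # one-time energy spent on training
KWH_PER_QUERY = 0.001           # energy spent on each inference call
QUERIES_PER_DAY = 10_000_000    # steady daily traffic

print("Break-even queries:", queries_to_match_training(TRAINING_KWH, KWH_PER_QUERY))
print("Break-even days:", days_to_match_training(TRAINING_KWH, KWH_PER_QUERY, QUERIES_PER_DAY))
```

Whatever figures you plug in, the shape is the same: training is a fixed quantity, while the inference side keeps growing for as long as the product is in use.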

Consider this: by commonly cited estimates, a top-tier foundational model consumes on the order of watt-hours for a single complex query. That sounds small until you multiply it. Imagine that level of consumption integrated into your search engine, your email client, your CRM, your car's navigation. The math becomes staggering. We're building a global infrastructure that is, by its very design, financially and environmentally unsustainable.

The industry's answer? "Don't worry, the next generation of chips will be 30% more efficient!"

That's not a solution. It's a rounding error in the face of exponential demand. To find a real solution, we have to stop looking at the hardware and start questioning the assumptions that led us here.

First-Principles Deconstruction: What 'Job' Are We Hiring AI to Do?

First-principles thinking is the process of breaking a problem down into its most basic, foundational truths. You strip away the assumptions, the industry dogma, and the "way things have always been done" until you're left with only what is undeniably true. From that solid new ground, you can build a better solution.

Let's apply this mental scalpel to the AI energy crisis.

The Method: Challenging What We "Know"

The process is simple but rigorous. We will identify the core assumptions that underpin our current approach to AI development and energy consumption. For each one, we will challenge it, asking "Why must this be true?" until we expose the flawed logic.

Assumption 1: The job is 'to execute a computational model'.

This is the most pervasive and dangerous assumption in the entire industry. We talk about "running models," "making queries," and "calling APIs." Our entire technical and business vocabulary is centered on the solution.

Refutation: This confuses the tool with the job. No customer, internal or external, wakes up in the morning with the goal of "executing a computational model." That's like saying a person with a headache has the job of "swallowing a pill." It's nonsense.

The real job is what precedes the model. A marketing manager's job isn't to run a "customer segmentation model"; it's to 'determine which customers are most likely to churn.' An oncologist's job isn't to "run a diagnostic model"; it's to 'identify the most effective treatment path for a patient.'

The AI model is just one possible—and as we've seen, incredibly inefficient—way to get that job done. By focusing on the model, we lock ourselves into making the model better, rather than finding a more elegant way to achieve the outcome.

Assumption 2: Innovation is defined by hardware and algorithmic efficiency.

Our industry's heroes are the chip designers and the algorithm wizards who squeeze out a few more percentage points of performance. This has created a culture that defines "innovation" in a dangerously narrow way.

Refutation: This hyper-focus on a single type of innovation is a strategic failure. A more robust framework is Doblin's Ten Types of Innovation, which shows that true breakthroughs rarely come from just one area. The industry is obsessed with Product Performance (a faster chip, a better algorithm) while completely ignoring massive opportunities in:

Profit Model: How can we change the way we charge for AI to incentivize efficiency?

Process: How can we redesign the workflow to get the job done with less need for computation?

Service: How can we deliver the outcome to the user in a way that feels seamless and "just happens"?

Focusing only on performance is like a Formula 1 team spending all its money on the engine while ignoring aerodynamics, pit stop strategy, and driver skill. You won't win the championship.

Assumption 3: Bigger data and larger models are axiomatically better.

The prevailing wisdom is a simple equation: More Parameters + More Data = Better AI. We're in a race to build ever-larger models, celebrating each new parameter count milestone as a victory for progress.

Refutation: This logic ignores the brutal law of diminishing returns. Past a certain point, the monumental increase in computational and energy cost to train and run these behemoth models yields only marginal, often imperceptible, gains in performance for a specific job. A model that is 0.5% more accurate at identifying cat pictures but costs 200% more energy to run is not a better solution; it's a wildly inefficient one.

We have to replace the question "Is this model statistically better?" with "Is this model meaningfully better at getting the job done, considering the total cost?" Most of the time, the answer will be a resounding no.
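One way to put the "meaningfully better" question into numbers is to compare energy per correct outcome instead of accuracy alone. The sketch below is a hedged illustration, not a benchmark: the 95% baseline accuracy is an assumption, "0.5% more accurate" is read as half a percentage point, and energy is in arbitrary units.

```python
def energy_per_correct_outcome(energy_per_call: float, accuracy: float) -> float:
    """Average energy spent to obtain one correct result."""
    if not 0 < accuracy <= 1:
        raise ValueError("accuracy must be in the interval (0, 1]")
    if energy_per_call < 0:
        raise ValueError("energy_per_call must be >= 0")
    return energy_per_call / accuracy


# Assumed baseline: 95% accurate at 1 unit of energy per call.
baseline = energy_per_correct_outcome(energy_per_call=1.0, accuracy=0.950)

# Bigger model: half a point more accurate, 200% more energy (3 units per call).
bigger = energy_per_correct_outcome(energy_per_call=3.0, accuracy=0.955)

ratio = bigger / baseline
print(f"Bigger model spends {ratio:.2f}x the energy per correct outcome")
```

Under these assumptions the larger model costs nearly three times as much energy for each correct answer, which is the comparison that matters when the job, not the leaderboard, is the unit of value.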

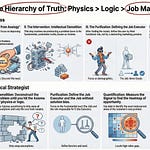

Synthesis: A New Foundation for AI Innovation

After stripping away the flawed assumptions, we're left with a set of powerful, foundational truths. Any sustainable and profitable AI strategy must be built on this new foundation.

Truth 1: The goal is to deliver the customer's desired outcome with the minimum necessary computation.

Truth 2: The value lies in the quality and timeliness of the insight, not the complexity of the model that generated it.

Truth 3: Energy optimization must be a systemic strategy that starts with the user's job, not a tactical problem confined to the data center.

This is our new starting point. It forces us to stop asking, "How do we power the model?" and start asking, "How do we get the job done?"

Architecting the Low-Energy Future: An Outcome-Driven Playbook

So, what does it look like to build solutions on this new foundation? It requires a shift from thinking like a computer scientist to thinking like a JTBD innovator. Here are some practical examples, ranging from what you can do today to what's coming next.

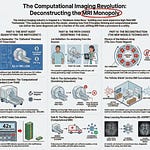

Part 1: Concepts Working Today (That Few Are Doing)

These aren't futuristic dreams; they are highly practical strategies that leading-edge teams are implementing right now to slash their computational and energy costs.

Radical Specialization: Stop using a sledgehammer to crack a nut. Instead of calling a massive, generalist foundational model for every task, build or use tiny, purpose-built models. A model designed only to do sentiment analysis on customer reviews will be orders of magnitude smaller, faster, and cheaper to run than a generalist model that can also write poetry and debug code.

Model Cascading: This is an intelligent routing system. A user's query first hits a very small, very cheap "triage" model. This model assesses the query's complexity. Can it be answered simply? If so, it answers it and the process stops. If it's more complex, it gets routed to a mid-tier model. Only the most complex, novel queries get passed to the giant, expensive foundational model. This is the 80/20 rule in action, potentially deflecting 80% of your queries from your most expensive energy-consuming resource.
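The routing logic behind a cascade can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the three "models" are hypothetical stand-in functions, the relative costs are made-up units, and a tier signals "too hard for me" by returning None.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence, Tuple


@dataclass(frozen=True)
class Tier:
    name: str
    relative_cost: float                          # hypothetical energy units per call
    try_answer: Callable[[str], Optional[str]]    # returns None to escalate


def run_cascade(query: str, tiers: Sequence[Tier]) -> Tuple[str, str, float]:
    """Try tiers cheapest-first; return (answer, tier name, total cost spent)."""
    spent = 0.0
    for tier in tiers:
        spent += tier.relative_cost               # every attempted tier costs energy
        answer = tier.try_answer(query)
        if answer is not None:
            return answer, tier.name, spent
    raise RuntimeError("no tier could answer the query")


# Hypothetical stand-ins for real models.
FAQ = {"what are your opening hours?": "9am to 5pm, Monday to Friday."}


def triage_model(query: str) -> Optional[str]:
    return FAQ.get(query.strip().lower())


def mid_model(query: str) -> Optional[str]:
    # Pretend short queries are simple enough for the mid-tier model.
    return f"[mid-tier answer to: {query}]" if len(query.split()) <= 12 else None


def large_model(query: str) -> Optional[str]:
    return f"[large-model answer to: {query}]"


TIERS = (
    Tier("triage", 1.0, triage_model),
    Tier("mid", 10.0, mid_model),
    Tier("large", 100.0, large_model),
)

print(run_cascade("What are your opening hours?", TIERS))
```

Note the design trade-off the `spent` counter exposes: an escalated query pays for every tier it passed through, so a cascade only saves energy when the cheap tiers resolve a large share of traffic.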

Outcome-as-a-Service Business Models: This is the most powerful strategic shift. Stop charging customers per API call or per token. That model incentivizes more computation. Instead, change your business model to charge for the outcome.

Don't charge to "run a lead scoring model"; charge per qualified sales lead delivered.

Don't charge to "run a fraud detection model"; charge a percentage of the fraudulent transactions you prevent.

This completely flips the script. Now, your company is financially incentivized to find the most computationally cheap, energy-efficient way to deliver that outcome for the customer. Your R&D focus shifts from building bigger models to building smarter, leaner systems.

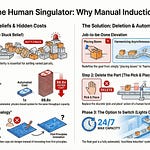

Part 2: Novel Concepts for the Next Generation

Looking ahead, we can imagine solutions that get a higher-level job done with even less visible effort. These concepts elevate the level of abstraction, solving problems before they're even explicitly asked.

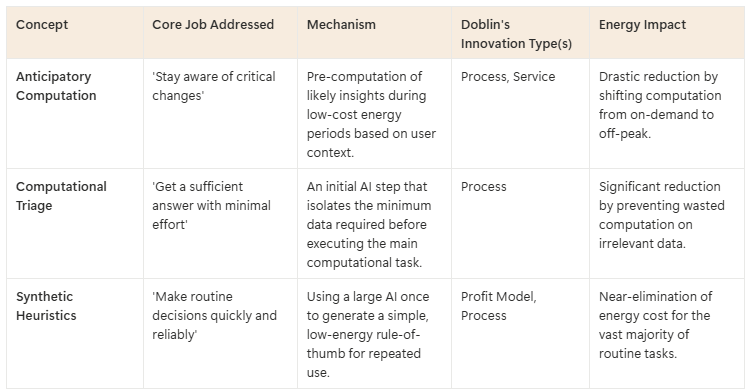

Anticipatory Computation: The most energy-intensive queries are often complex, ad-hoc questions from business users. What if the system could anticipate these needs? An AI system that understands a sales leader's context could analyze new sales data overnight (when energy costs are low) and pre-compute the answers to the five most likely questions that leader will have in the morning. When the leader arrives, the insights are already waiting in a dashboard. The user's job elevates from the difficult 'run an analysis to find sales trends' to the effortless 'stay aware of critical sales trends.' The high-energy, on-demand query is eliminated entirely.
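At its simplest, this pattern is a batch job plus a lookup. The Python sketch below is a minimal illustration, assuming a hypothetical `expensive_analysis` function and a hand-supplied list of likely questions; how a real system would predict those questions, and how it would schedule the overnight run, is out of scope here.

```python
from typing import Callable, Dict, Iterable, Tuple


class AnticipatoryCache:
    """Pre-computes answers off-peak and serves them instantly on demand."""

    def __init__(self, expensive_analysis: Callable[[str], str]) -> None:
        self._analyze = expensive_analysis
        self._answers: Dict[str, str] = {}
        self.on_demand_runs = 0                   # counts costly daytime fallbacks

    def precompute(self, likely_questions: Iterable[str]) -> None:
        """Run as an overnight batch, when energy is cheap."""
        for question in likely_questions:
            self._answers[question] = self._analyze(question)

    def ask(self, question: str) -> Tuple[str, bool]:
        """Return (answer, served_from_cache)."""
        if question in self._answers:
            return self._answers[question], True
        self.on_demand_runs += 1                  # unanticipated question
        return self._analyze(question), False


def expensive_analysis(question: str) -> str:
    # Hypothetical stand-in for a heavy model call over fresh sales data.
    return f"analysis of: {question}"


cache = AnticipatoryCache(expensive_analysis)
cache.precompute(["Which regions missed quota?", "Which deals slipped this week?"])
print(cache.ask("Which regions missed quota?"))
```

Tracking `on_demand_runs` gives a simple signal of how well the anticipated question list matches what users actually ask.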

Computational Triage: Before running any query, an intelligent system first determines the absolute minimum information needed to get the user's job done. Instead of analyzing an entire 100-page document to answer one question, it first identifies the three specific paragraphs that contain the likely answer and analyzes only those. This avoids massive amounts of wasted computational effort by focusing resources with surgical precision.
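The paragraph-selection step might look something like the following Python sketch. It is deliberately naive: plain word overlap stands in for whatever relevance scoring a real system would use, and the stopword list and sample document are assumptions made for illustration.

```python
import re
from typing import List, Set

STOPWORDS = frozenset({"a", "an", "and", "did", "in", "is", "of", "on", "the", "to", "why"})


def _words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_paragraphs(document: str, question: str, k: int = 3) -> List[str]:
    """Return up to k paragraphs sharing the most words with the question."""
    paragraphs = [p.strip() for p in document.split("\n\n") if p.strip()]
    query_words = _words(question) - STOPWORDS
    scored = [(len(query_words & _words(p)), index) for index, p in enumerate(paragraphs)]
    # Highest overlap first; ties go to the earlier paragraph.
    best = sorted(scored, key=lambda pair: (-pair[0], pair[1]))[:k]
    # Drop paragraphs with no overlap and restore document order.
    keep = sorted(index for score, index in best if score > 0)
    return [paragraphs[index] for index in keep]


DOC = (
    "Revenue grew in the north region.\n\n"
    "The office party is on Friday.\n\n"
    "Churn rose among small accounts in the north region.\n\n"
    "Parking permits renew in June."
)

for paragraph in select_paragraphs(DOC, "Why did churn rise in the north region?", k=2):
    print(paragraph)
```

Only the selected paragraphs would then be passed to the expensive model, so its input shrinks from the whole document to a handful of passages.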

Synthetic Heuristics: A heuristic is a mental shortcut or a rule of thumb used for quick decision-making. We can use a large AI model once to analyze a complex system and generate a set of simple, fast, low-energy rules. For example, a massive model could analyze millions of logistics data points to conclude: "For 95% of deliveries under 50 miles, Shipper B is the cheapest option if the package weighs less than 5 pounds." This simple rule can now be used millions of times for routine decisions with virtually zero energy cost, effectively "amortizing" the one-time energy cost of the big model. The big model is reserved only for the 5% of novel, edge-case decisions.
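Using the shipping rule from the example above, the pattern can be sketched in Python as a cheap rule with an expensive fallback. The `big_model` function is a hypothetical stand-in, and the sketch assumes the rule has already been extracted by a one-time analysis.

```python
from typing import Callable, Tuple

Decision = Tuple[str, str]  # (chosen shipper, "rule" or "model")


def make_shipper_router(
    big_model: Callable[[float, float], str],
) -> Callable[[float, float], Decision]:
    """Wrap an expensive model with the cheap rule it once produced."""

    def choose_shipper(distance_miles: float, weight_lb: float) -> Decision:
        # Rule from the article's example: short, light deliveries go to Shipper B.
        if distance_miles < 50 and weight_lb < 5:
            return "Shipper B", "rule"
        # Everything else is an edge case for the expensive model.
        return big_model(distance_miles, weight_lb), "model"

    return choose_shipper


def big_model(distance_miles: float, weight_lb: float) -> str:
    # Hypothetical stand-in for a costly model call.
    return "Shipper A"


choose_shipper = make_shipper_router(big_model)
print(choose_shipper(12.0, 2.5))
print(choose_shipper(300.0, 2.5))
```

One caveat worth keeping in mind: a rule that holds for 95% of matching deliveries is still wrong for the rest, so the thresholds deserve periodic re-validation against the big model.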

Reference Table for Novel Concepts

Building an Uncopyable Moat with Systemic Innovation

Here's why this strategic shift is so powerful: it creates a competitive advantage that is incredibly difficult to copy.

Any competitor with enough money can go buy the latest, most powerful GPUs. A performance advantage based on hardware is temporary and expensive to maintain.

But a strategy built on the principles we've discussed is a system. It's a combination of multiple types of innovation from Doblin's framework:

You have a new Profit Model (Outcome-as-a-Service).

You have a new Process (Anticipatory Computation).

You have a new Service (Delivering pre-verified insights instead of a query tool).

This is all enabled by Product Performance (Specialized, efficient models).

When you weave these types of innovation together, you create a complex, interlocking system that delivers more value to the customer at a lower operating cost for you. A competitor can't just copy one piece; they have to replicate the entire strategic stack. This is how you build a lasting, uncopyable moat in the age of AI.

Conclusion: Shifting from a 'Compute' to an 'Outcome' Paradigm

The trillion-dollar energy problem is not an engineering challenge waiting for a silver-bullet chip. It's a strategic challenge waiting for a new way of thinking. It's a design problem.

For too long, we've operated under a "compute-centric" paradigm, where the goal is to build bigger models and find more power for them. This path leads to a financial and environmental dead end.

The future belongs to those who adopt an "outcome-centric" paradigm.

This requires a fundamental shift in how we build products, structure our teams, and design our business models. We must stop asking, "How can we make our models 10% more efficient?" and start asking the far more powerful question:

"What is the user's real Job-to-be-Done, and what is the most elegant, minimalist, and computationally cheap way to get it done for them?"

The company that answers that question won't just save money on its energy bill. It will define the next generation of artificial intelligence.

Follow me on 𝕏: https://x.com/mikeboysen

If you're interested in inventing the future as opposed to fiddling around the edges, feel free to contact me. My availability is limited.

Mike Boysen - www.pjtbd.com

My Blog: https://jtbd.one

Book an appointment: https://pjtbd.com/book-mike

Join our community: https://pjtbd.com/join